Which optimizer doesn’t dream of a trouble-free testing cycle? From the perfect hypothesis to a beautiful design, an IT department that, hand in hand with quality assurance, delivers an error-free implementation, and, after a short test runtime, a double-digit increase in sales. All of this, of course, without discussions with colleagues, without problems in implementation or quality assurance, and in half the time. If this doesn’t describe your experience, welcome to reality.

In the following article, I would like to give you tips for successful test implementation. These tips alone can head off many problems early and make the testing cycle smoother.

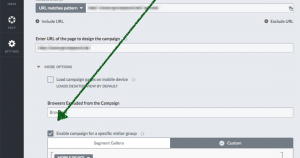

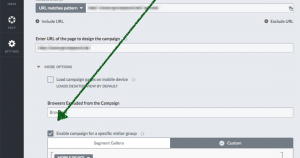

#1 No deployments during the test run

Changes or bug fixes, even if they appear small and simple, can cause complications while the test is being played out. This matters above all for tag-based testing tools. If there is a roadmap with a fixed deployment schedule, tests can be run before or after a deployment. If a deployment cannot be avoided during the test runtime, talk to your developer ahead of time. By running a copy of the live test against the new code, e.g. on a staging server, possible conflicts can be identified early and the test can be adjusted.

Would you like to estimate the test runtime? My colleague Manuel Brückmann covers this topic in detail in his article "Why you’re shutting down your A/B-test too soon". Our rule of thumb for an average test runtime:

- A test should run for at least 2–4 weeks and collect at least 1,000–2,000 conversions per variation.
- Special events (newsletters, TV spots, sales, etc.) should take place during this period.
- As many channels as possible should be represented (the total traffic mix), either through targeting up front or through segmentation in the follow-up analysis.
- Once the test has reached a statistical significance of at least 95% (two-tailed, i.e. covering both positive and negative effects) and this result is stable, the test can be stopped.
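
The stopping rule above can be sketched in Python. This is a minimal sketch under a few assumptions: there is one control and one variant, and significance is checked with a pooled two-proportion z-test. The article does not say which test a given tool uses. `Variation`, `two_tailed_p_value` and `may_stop` are hypothetical names, and the numbers are made up. The sketch checks the minimum runtime, the minimum conversions per variation and two-tailed 95% significance. Whether the result is stable, and whether the traffic mix is representative, still has to be judged by watching the test over several days.

```python
import math
from dataclasses import dataclass


@dataclass
class Variation:
    """Observed totals for one variation (hypothetical structure)."""
    visitors: int
    conversions: int


def two_tailed_p_value(control: Variation, variant: Variation) -> float:
    """Pooled two-proportion z-test; returns the two-tailed p-value."""
    rate_a = control.conversions / control.visitors
    rate_b = variant.conversions / variant.visitors
    pooled = (control.conversions + variant.conversions) / (
        control.visitors + variant.visitors
    )
    std_err = math.sqrt(
        pooled * (1 - pooled) * (1 / control.visitors + 1 / variant.visitors)
    )
    if std_err == 0:
        return 1.0  # no variance at all (0% or 100% conversion): nothing to test
    z = (rate_b - rate_a) / std_err
    # Two-tailed: P(|Z| >= |z|) for a standard normal Z equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def may_stop(
    weeks_running: float,
    control: Variation,
    variant: Variation,
    min_weeks: float = 2.0,
    min_conversions: int = 1_000,
    alpha: float = 0.05,
) -> bool:
    """Rule-of-thumb check: minimum runtime, minimum conversions, 95% significance."""
    if weeks_running < min_weeks:
        return False
    if min(control.conversions, variant.conversions) < min_conversions:
        return False
    return two_tailed_p_value(control, variant) < alpha


control = Variation(visitors=20_000, conversions=1_000)  # made-up numbers
variant = Variation(visitors=20_000, conversions=1_150)
print(may_stop(3, control, variant))  # True
```

The runtime and conversion thresholds are checked before the p-value on purpose. A significant result after a few days is not yet a reason to stop the test.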

#2 Be aware of contrast

Combining several changes in one variation often makes high-contrast tests possible. Too much contrast, however, makes it difficult to determine afterwards which change had the greatest effect. In addition, the effort for implementation and quality assurance rises. My recommendation is therefore: test one major change first, then make further adjustments in subsequent sprints and validate them.

#3 Be aware of the worst-case scenario

With a wide product assortment, the template often contains varying presentations, or the header changes as visitors progress through the site, e.g. UVPs are displayed more prominently in the checkout. This can cause problems, large or small, in development as well as in quality assurance. By setting up a worst-case scenario in advance (e.g. in the form of an impediment backlog), special cases can be taken into account as early as development. With this list, quality assurance can check all possible scenarios thoroughly. Ask your customers about special cases (special sizes, particular product categories such as accessories, etc.). This makes it easier for both sides to catch deviations.

#4 Agility vs. perfection

A/B tests are often expected to be implemented to perfection. Yet after several weeks of tuning cycles, development, quality assurance and test runtime, the result may still turn out to be unsuccessful. As we like to say, “a test is not a deployment”. Important: this does not mean lowering the quality of test implementation or quality assurance. Rather, it is about minor details whose effect on visitors is questionable. The next time you face this problem, simply ask yourself: “Would I not buy this product because, e.g., the font size, line spacing or margin width isn’t right?”

#5 Be aware of lateral entry

Lateral entry into a test should always be taken into account. Visitors arriving via SEA or SEO, as well as existing customers, may under certain circumstances be shown a modified page or be redirected to another page. In the former case, the way the test is played out can cause serious errors.

#6 Take note of the test starting point and any discrepancy in the URL structure

Pay attention to possible discrepancies in the URL structure, above all when running multi-page or funnel tests. If the structure changes unexpectedly, in the worst case the testing tool's targeting no longer takes effect, and participants no longer see the test variation. With external providers, e.g. for payment methods, conversions and revenue can be lost if there is no return path to the thank-you page.

#7 No test without targeting

Excluding certain devices or browsers is unnecessary if quality assurance can guarantee that the test plays out error-free on all end-user devices and their associated browsers. In practice, however, this is rarely possible. A whitelist, i.e. a list of all end-user devices and browsers that quality assurance is to check, can exclude potential sources of error. Ideally, this list is defined before development is released. The current hit rates from an existing web analytics or reporting tool, such as Google Analytics, help here.

The somewhat hidden custom targeting in Visual Website Optimizer. The upper target, for example, excludes mobile end-user devices. The lower target contains a regular expression that admits only the desired browsers (whitelist) into the test.
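
A whitelist like this can be derived from the hit rates in your analytics tool. The following Python sketch is purely illustrative. It assumes you have exported traffic shares per device, browser and major version. The `Client` type, the function names, the numbers and the 5% cut-off are all hypothetical. In Visual Website Optimizer itself, the rule would be expressed through the tool's own targeting options, not in Python.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Client:
    """A device/browser combination as reported by web analytics (hypothetical)."""
    device: str         # e.g. "desktop", "tablet", "mobile"
    browser: str        # e.g. "Chrome", "Firefox", "IE"
    major_version: int


def build_whitelist(
    traffic_share: dict[Client, float],
    min_share: float,
    excluded_devices: frozenset[str] = frozenset(),
) -> frozenset[Client]:
    """Keep combinations with enough traffic whose device class is not excluded.

    The result is also the list that quality assurance has to check.
    """
    return frozenset(
        client
        for client, share in traffic_share.items()
        if share >= min_share and client.device not in excluded_devices
    )


def is_eligible(client: Client, whitelist: frozenset[Client]) -> bool:
    """Only whitelisted combinations are admitted into the test."""
    return client in whitelist


# Made-up traffic shares, as they might be exported from an analytics tool.
shares = {
    Client("desktop", "Chrome", 120): 0.40,
    Client("desktop", "Firefox", 121): 0.15,
    Client("mobile", "Safari", 17): 0.30,
    Client("desktop", "IE", 8): 0.01,
}
whitelist = build_whitelist(shares, min_share=0.05, excluded_devices=frozenset({"mobile"}))
print(sorted(c.browser for c in whitelist))  # ['Chrome', 'Firefox']
```

Raising `min_share` shrinks the list that quality assurance has to check. The price is that more visitors are excluded from the test.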

#8 Exclusion from measurement

Who uses a site every day? Besides your customers, these are often service providers such as call centers, or even colleagues in the office next door. Their visits can distort your results. Therefore, exclude your own IP address as well as those of internal and external staff from measurement. Note: not every tool lets you filter out IP addresses from the results retroactively.

#9 Browsers get old too

Older browsers, such as IE 7 and 8, are dying out. Judging by traffic numbers, they can often be disregarded. In our experience, browsers such as IE 7 or IE 8 require a great deal of additional time in development and quality assurance.

Source: http://www.w3schools.com/browsers/browsers_stats.asp

According to statistics from December 2014, IE 7 and 8 together accounted for 1.5% of visitors. Out of 1,000 visitors, that corresponds to 15.

#10 Are iFrames present?

External service providers are happy to extend your site with additional functions, and these are sometimes implemented via an iFrame. iFrames can only be manipulated if they are located on the same domain. If they are not, your testing tool must also be implemented on the provider's domain. If you are lucky, this is possible. In most cases, however, it is not. Check the concept for feasibility with your developer in advance.

#11 Take note of quality assurance expense

The time required for quality assurance is often underestimated. Typical problem cases are tests on the product detail page or in the checkout, where the effort multiplies quickly. Talk to your quality assurance team in advance so that you are not surprised. Here is a simplified calculation of the time required for checkout tests:

Variations × Browsers × Pages × Customer types × Delivery types × Payment types × Special cases = Pages to be considered

Pages to be considered × Time per page = Time needed by quality assurance

A test with two variations, only four browsers, five pages, three delivery types and five payment types (with a single customer type and no special cases) already amounts to 2 × 4 × 5 × 3 × 5 = 600 pages to be checked. At just two minutes per page, that is 1,200 minutes, or 20 hours.

#12 Is the product diversity known?

The product detail page often offers great optimization potential, from small adjustments to a complete restructuring. Often, however, the number of products and their varying presentations (special cases) receive too little attention. The result is additional adjustments in development and extra effort for quality assurance, which delays the start of the test.

You will find a multitude of differently structured product detail pages on Amazon.

If you are not familiar with all possible special cases, ask colleagues who can help. Compile the relevant special cases before the test is designed and pass this information on to development and quality assurance.

If it is too late for this, or if unexpected problems arise, consider whether individual categories or brands can be tested first. This allows the test to start and gives you time to adapt it to your entire assortment.

#13 Take note of Ajax and adjustments to product images

Test changes not infrequently affect dynamically reloaded content, e.g. inventory information, but this is not always immediately obvious. In the best case, this merely adds effort for development. In the worst case, implementation is impossible. When product images are reduced or enlarged, image quality must be checked: a pixelated image does not show the product at its best.

When enlarging thumbnails, it quickly becomes clear that image quality is no longer adequate.

Reloaded content is not immediately available for manipulation. Whether this causes problems when implementing a given test must be checked in detail.

Keep reloaded content in mind and involve development early. Enlarging product images or adding larger content (text or images) should be tried out in advance to ensure feasibility.
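
Whether an enlarged product image will hold up can be estimated before development starts. Compare the source image's pixel dimensions with the area it will fill. The Python sketch below is a rough heuristic under stated assumptions:

- Display sizes are given in CSS pixels.
- `device_pixel_ratio` is 1.0 on standard screens and higher on high-density displays.
- Cropping and aspect-ratio changes are ignored.

The function names are hypothetical.

```python
import math


def required_pixels(
    display_width: int, display_height: int, device_pixel_ratio: float = 1.0
) -> tuple[int, int]:
    """Physical pixels needed to fill a display area given in CSS pixels."""
    return (
        math.ceil(display_width * device_pixel_ratio),
        math.ceil(display_height * device_pixel_ratio),
    )


def will_look_pixelated(
    source_width: int,
    source_height: int,
    display_width: int,
    display_height: int,
    device_pixel_ratio: float = 1.0,
) -> bool:
    """True if the source image would have to be upscaled to fill the area."""
    needed_w, needed_h = required_pixels(display_width, display_height, device_pixel_ratio)
    return source_width < needed_w or source_height < needed_h


# A 150x150 px thumbnail enlarged to a 400x400 CSS-pixel area:
print(will_look_pixelated(150, 150, 400, 400))  # True
```

An 800×800 px source is sufficient for a 400×400 area at a device pixel ratio of 2.0, but not at 3.0.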

#14 Conceal complex modifications through loading animations

Visible modifications can unsettle visitors. In the worst case, trust is lost and the visit ends. This effect can be reduced with a loading animation. The affected area is first hidden and overlaid with a loading animation. It becomes visible again only after all necessary manipulations are complete. At best, the modifications thus go unnoticed, and they may even improve the user experience.

#15 100% play-outs as an intermediate solution

After a successful test, the winning adjustment is often meant to go live on the site permanently as quickly as possible. Depending on a company's deployment cycle, however, this can easily take several weeks to a month. A 100% play-out, i.e. serving the winning variation to all visitors via the testing tool, provides a temporary bridge. As a rule, the costs of the testing tool are lower than the additional revenue from playing out the winning variation, so the uplift can be captured immediately.

Conclusion

As soon as you want to test more than the red vs. the blue button, the entire process, from concept through to the finished test, becomes more complex. Unfortunately, this often goes unnoticed in practice. Many problems can be prevented through good preparation and by communicating with colleagues before development begins. Be aware of the implementation and quality assurance effort, and consult your developers and quality assurance team in good time. This makes it easier to estimate effort and potential problems.

Negative test results are often discussed in order to derive improvements and learnings. But when a test succeeds, many people never hear about the results. Share your successes with everyone involved and thereby boost the motivation of the whole team. Celebrate the success together!

What is your procedure when developing tests? I would love to hear your feedback in the comments!
